The AI Absorption Has Begun

AI Security captures most of the headlines in the cyber industry, but, surprisingly, it's not capturing most of the money.

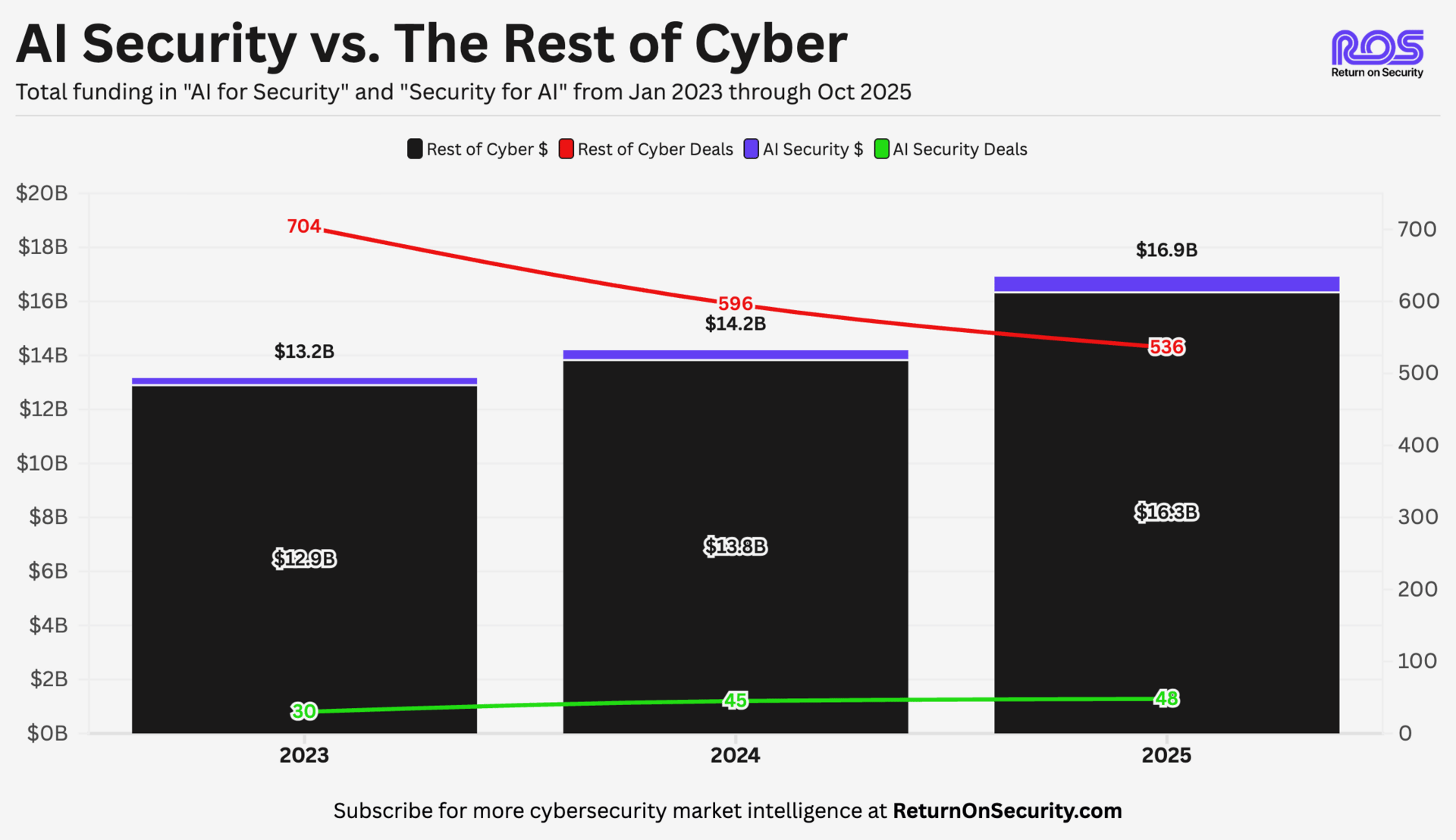

Return on Security data from the last three years shows a consistent trend:

AI Security is the loud minority of cyber, and the lines defining what makes a company "AI Security" are blurring fast.

The Data

| Year | AI Security Funding | AI Security Deals | All Other Funding | All Other Deals |
|---|---|---|---|---|
| 2023 | $264M | 30 | $12.9B | 704 |
| 2024 | $363M | 45 | $13.8B | 596 |
| 2025 | $582M | 48 | $16.3B | 536 |

Table data is from Jan 2023 to Oct 2025, so 2025 is a partial year.
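As a rough, illustrative sanity check, the shares discussed next can be recomputed from the table. The Python sketch below is not Return on Security tooling; the `DATA` layout and helper names are hypothetical. One subtlety: dividing 2025's 48 AI Security deals by all 584 deals gives about 8%, while dividing by the 536 other deals gives about 9%, so the denominator matters. Keep in mind that 2025 only covers January through October.

```python
# Figures from the table above. Dollar amounts are in millions of USD;
# 2025 covers Jan to Oct only. Names here are illustrative, not an official dataset.
DATA = {
    2023: {"ai_usd": 264, "ai_deals": 30, "other_usd": 12_900, "other_deals": 704},
    2024: {"ai_usd": 363, "ai_deals": 45, "other_usd": 13_800, "other_deals": 596},
    2025: {"ai_usd": 582, "ai_deals": 48, "other_usd": 16_300, "other_deals": 536},
}


def share_of_total(ai: float, other: float) -> float:
    """AI Security as a percentage of all cybersecurity (AI Security + everything else)."""
    return 100 * ai / (ai + other)


def ratio_to_others(ai: float, other: float) -> float:
    """AI Security as a percentage of all *other* categories combined."""
    return 100 * ai / other


for year, row in DATA.items():
    deal_share = share_of_total(row["ai_deals"], row["other_deals"])
    funding_share = share_of_total(row["ai_usd"], row["other_usd"])
    deal_ratio = ratio_to_others(row["ai_deals"], row["other_deals"])
    print(f"{year}: {deal_share:.1f}% of deals, {funding_share:.1f}% of funding "
          f"({deal_ratio:.1f}% relative to non-AI deals)")
```

Run as-is, it prints one line per year; AI Security's share of funding stays between roughly 2% and 3.5% across all three years.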

Everyone says AI Security is transforming cybersecurity. But if it's really a revolution, why does it account for only about 8% of deals and 3% of funding in 2025?

The answer: We're not witnessing a revolution. We're watching an absorption.

It's not a revolution in the typical sense of the word, but the addition of a foundational technology. Sadly, saying, "The [absorption] will be televised" doesn't quite have the same ring to it.

Chart data is from Jan 2023 to Oct 2025

"AI Security" is being absorbed and integrated into every existing category at roughly the rate AI is being adopted into business operations.

The AI Security Umbrella

For context (see what I did there?), let’s break the "AI Security" umbrella down into a few parts:

AI for Security

Using AI to improve security

AI Governance: Platforms for cataloging, assessing, and mitigating risks in AI applications, emphasizing safety, compliance, responsible use, and security.

AI Privacy Assurance: Platforms safeguarding sensitive data and personal information used in AI processes, ensuring the responsible use of AI.

Security for AI

Protecting AI systems

AI Adversary Simulation: Software simulating real-world threat actors targeting deployed AI models through adversarial examples, prompt injections, or model tampering.

AI Model Security: Software evaluating AI models for misuse potential and protecting model weights from theft.

AI Application Security: Platforms maintaining the integrity of AI systems and shielding them from misuse. This is a catch-all category for companies that do not clearly fall into the other categories.

But here's how I’ve been thinking about them recently:

Security for AI = Just Security

AI for Security = Just Better Tools

Could you slice these categories differently or add more to these definitions? Almost certainly, but I try to favor clarity over the obscurity and complexity that marketing language so often adds.

You’ll also notice that I didn’t mention anything about AI Agents. 😱

That’s an intentional omission: I view AI Agents as a natural, future-state extension of what it means to “do security” (not that any of us can agree on what an “AI Agent” actually is).

Why This Matters

Every security product was adding AI in 2023 and early 2024, because it looked as if "AI Security" would become a standalone category. As AI has matured and what it can do has expanded, that once-perceived differentiation has started to disappear.

This isn't a bad thing. This is just an evolution of technology in our industry.

The same thing happened with the cloud from 2010-2020, but AI innovation has compressed the timeline. By 2015, every product added "cloud" to its positioning. By 2020, many had quietly dropped it because the cloud had become the default way of doing business.

AI is following the exact same path, just much faster.

The Inversion

Breaking down where the money goes shows the absorption happening:

| Year | AI for Security | Security for AI |
|---|---|---|
| 2023 | $43M (16%) | $221M (84%) |
| 2024 | $173M (47%) | $191M (53%) |
| 2025 | $314M (54%) | $268M (46%) |
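To see where the split tips over and to check the growth figure, here is a similarly illustrative Python sketch over the rounded table values (the `SPLIT` layout and function names are hypothetical). Using rounded millions, AI for Security's growth from $43M to $314M comes out at roughly 630%, slightly above the 624% quoted below, presumably because the underlying figures are unrounded; the 2024 halves also sum to $364M rather than $363M for the same reason. On these numbers, AI for Security nearly reaches parity in 2024 (about 47%) and first holds the larger share in 2025.

```python
from typing import Optional

# "AI Security" funding split from the table above, in millions of USD (rounded).
SPLIT = {
    2023: {"ai_for_security": 43, "security_for_ai": 221},
    2024: {"ai_for_security": 173, "security_for_ai": 191},
    2025: {"ai_for_security": 314, "security_for_ai": 268},
}


def pct_growth(start: float, end: float) -> float:
    """Percentage growth from start to end."""
    return 100 * (end - start) / start


def first_crossover_year(split: dict) -> Optional[int]:
    """First year in which AI for Security funding exceeds Security for AI funding."""
    for year in sorted(split):
        if split[year]["ai_for_security"] > split[year]["security_for_ai"]:
            return year
    return None


for year in sorted(SPLIT):
    row = SPLIT[year]
    total = row["ai_for_security"] + row["security_for_ai"]
    share = 100 * row["ai_for_security"] / total
    print(f"{year}: total ${total}M, AI for Security share {share:.0f}%")

growth = pct_growth(SPLIT[2023]["ai_for_security"], SPLIT[2025]["ai_for_security"])
print(f"AI for Security growth, 2023 to 2025: {growth:.0f}%")
print(f"First year AI for Security leads: {first_crossover_year(SPLIT)}")
```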

The gap nearly closed in 2024, and the crossover came in 2025.

Funding for “Security for AI” stalled, dipping from $221M to $191M before recovering to $268M, while “AI for Security” grew 624% between 2023 and 2025 as nearly every startup that came on the scene did something with AI for security. Within “AI for Security” itself, almost all of that money went into companies trying to improve Security Operations and the SOC.

These figures don’t come close to capturing the incalculable amount of new and existing funding poured into established companies for AI product R&D and wholesale pivots toward AI.

“AI Security” funding, broadly speaking, is still growing, but not because it's special any longer. It's growing because AI is becoming normal. Tracking “AI Security” as its own category will soon be less useful, except for companies that actually secure AI systems, foundation models, and the like.

The AI Security umbrella of categories isn’t failing. Quite the opposite, actually. It’s succeeding by disappearing as security follows (or is supposed to follow!) the business and technology waves.